AI isn’t just advancing industries; it’s redefining the cybersecurity battleground. While businesses embrace AI for innovation, cybercriminals are weaponizing it to launch more complex, large-scale attacks. These aren’t distant threats—they’re happening now. Bad actors have been early AI adopters for adversarial purposes, pushing most enterprises back on their heels as they’ve lagged in their deployment of defensive AI due to many factors, including model governance restrictions and laborious corporate approval processes. Attackers have a nearly three-year head start on most cybersecurity programs, based on observations from our threat research unit, ACTIR.

This urgency led us to ask: Just how mature is enterprises’ use of AI-resistant solutions in protecting business operations and consumer accounts against AI-powered attacks?

That question sparked a seminal research project on the topic. We teamed up with the renowned research firm KS&R to survey and conduct in-depth interviews with cybersecurity executives from U.S. enterprises with more than $100 million in annual revenue. As a result of this research, we’ve uncovered how AI is shaping enterprises’ offensive and defensive strategies today—and what they’re planning for tomorrow.

The findings are clear: While many companies are experiencing more frequent and intense AI-powered threats, few are fully equipped to use AI to fight back. The good news is that our research found outliers, which we’ve named “AI Enthusiasts”; their use of AI is more mature than that of the other enterprises participating in our survey. I will share more insights from these outliers a little later. This blog covers the highlights of the results. We’ve prepared several reports from the data that you can download to learn more (see the additional resources below).

Survey Highlights

Considering responses from the total sample pool of cybersecurity professionals, we found that of all types of attacks enterprises experienced over the last year, 40% were AI-powered bots, 36% were basic bots and 24% were low-and-slow human fraud farm attacks.

Digging deeper, 88% of enterprises saw an increase in AI-powered bot attacks in the last two years, with a majority of surveyed businesses expressing serious concern about a variety of threats to their critical business applications, like revenue-driving sites and apps.

Given these two dynamics, it surprised us that relatively few enterprises demonstrate full preparedness and resilience against the surge of AI-powered threats they know they face today. What’s even more concerning to consider is that the AI-powered attacks enterprises face today are just the start. These attacks are only going to become more advanced as AI matures.

Account takeovers/credential stuffing and fake account creation are the attack types that pose the greatest concern to the companies surveyed, closely followed by generative AI-based threats. These can include deepfakes, LLM platform abuse and GPT prompt compromise. LLM platform abuse is a vector that creates unauthorized platform replicas and uses illegal reverse proxying that copies the platform’s insights. In GPT prompt compromise, bots programmatically submit prompts and scrape the responses with the intention of training their own models, reselling similar services or gaining access to proprietary, confidential and personal information.

Despite high levels of concern across the board, the survey revealed that different threat types lead to different levels of concern for each sector. For example, 81% of fintech businesses are worried about generative AI-based threats, compared with 62% of banks and 71% of airlines.

Differing Levels of Preparedness

The labor shortage in cybersecurity has been well documented. But an even starker reality is starting to emerge: the talent gap has grown into a chasm, as enterprises need top talent with not only cybersecurity skills but also AI skills.

And that combination is rare indeed. The research shows that 51% of all respondents don’t have enough talent with both skills, and 71% of airlines do not have enough personnel with the combination of AI and cybersecurity expertise. For the hotels and banks surveyed, approximately half don’t have the needed personnel, and 44% of fintechs signaled the same. This is the reality at a time when 40% of all attacks on enterprises in the last year were AI-powered, volumetric, sophisticated attacks.

Further, fintech companies along with technology platforms reported among the highest levels of preparedness for defending against volumetric AI-powered attacks. Nearly four-fifths of fintechs and banks are leveraging AI to enable faster response time to security incidents, compared with 57% of airlines.

When asked how prepared they felt to use AI to defend against bad actors, airlines (14%) were the most likely to report not being at all prepared, while fintechs (30%) and technology companies (30%) were among the industries most likely to report being highly prepared.

Utilization of Adversarial AI-Resistant Solutions

Cybersecurity executives aren’t in this battle alone. They invest heavily in trusted vendor partnerships. We asked them how important various characteristics are when they evaluate a vendor to support their bot management and account security needs.

Across all respondents, the characteristics cited most often were the use of AI within the vendor’s solutions (48%) and efficacy in detecting and mitigating threats that are unique to their industry (48%).

The data reveals a critical need for vendors to provide AI-resistant solutions that can match agile fraudsters, who are constantly refining adversarial AI to launch large-scale attacks.

Arkose Labs is recognized as a leader in this space. Our solution was the first cyber risk and fraud intelligence platform to roll out protections for enterprise GPT applications and the first to develop challenge solutions resistant to adversarial AI bot attacks. We’ve seen a lot!

For financially motivated cybercriminals, bots are the most efficient tool, driving higher ROI on attacks. As AI-powered bot use started to emerge, we continued to roll out innovations through Arkose MatchKey, which is a solution suite that includes AI-resistant challenges designed to detect and stop AI-powered bots from bypassing security controls. By continuously testing MatchKey against computer vision and machine learning technologies, we’ve refined techniques that force fraudsters to constantly update their models, raising their costs and cutting into their attack profits. When fraudsters can’t achieve high returns, they move on to easier targets.

The rise of multimodal language models (M-LLMs), which are advanced AI models that can process and understand multiple types of data like text, images and audio simultaneously, adds a new layer to the threat landscape. We’ve been working with category leaders in generative AI to understand how fraudsters use these models to evade detection and crack security challenges. Our ongoing efforts include evaluating M-LLMs’ ability to interpret Arkose MatchKey challenges, analyzing their problem-solving approaches, and incorporating these insights into product design, resulting in solutions with high efficacy.

In a recent A/B test, fewer than 1% of AI-powered bots cracked our AI-resistant challenges, compared to over 92% success with non-AI-resistant solutions. Beyond stopping attacks, our challenges enhance user experience by offering engaging alternatives to traditional methods. This blend of AI-forward security and usability solidifies us as a leader helping our customers combat adversarial AI. As AI becomes more accessible, the risk of misuse rises exponentially, making AI-resistant solutions essential for safeguarding your revenue-driving sites and apps. If you want to learn more about these efforts, let’s set up a one-on-one meeting (my direct email is at the end of this blog).

Actions AI Enthusiasts Can Teach Other Enterprises

As we analyzed the massive dataset from this survey, a pattern became clear. Some respondents are already heavily using AI to detect and mitigate bots and ensure consumer account integrity. We’ve coined the term AI Enthusiasts to identify this group of enterprises and learn from them. AI Enthusiasts are already taking three or more of the following actions to a large extent:

- Using AI to analyze historical data and identify vulnerabilities

- Using AI to predict future security threats

- Automating processes with AI to reduce manual tasks

- Leveraging AI to enable faster response time to security incidents

- Deploying AI tools to continuously monitor infrastructure

- Analyzing cybersecurity data in real time with AI tools

These enterprises represent the top quartile of those undertaking AI actions.
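For readers who want to apply the same lens to their own program, the three-or-more rule can be sketched in a few lines of Python. This is only an illustration: the action labels, the `"large extent"` response value and the `is_ai_enthusiast` helper are hypothetical names chosen for this example, not the survey's actual coding scheme, and the sketch covers only the counting rule, not the quartile analysis.

```python
# Hypothetical labels for the six actions listed above (not the survey's own field names).
AI_ACTIONS = (
    "analyze_historical_data",
    "predict_future_threats",
    "automate_manual_tasks",
    "faster_incident_response",
    "continuous_infrastructure_monitoring",
    "real_time_data_analysis",
)

MIN_ACTIONS = 3  # "three or more" actions, per the definition above


def is_ai_enthusiast(responses: dict[str, str]) -> bool:
    """Return True if at least MIN_ACTIONS actions are rated 'large extent'.

    `responses` maps an action label to a self-reported extent; the
    'large extent' value is an assumed encoding for this sketch.
    """
    count = sum(1 for action in AI_ACTIONS if responses.get(action) == "large extent")
    return count >= MIN_ACTIONS


# Example: a respondent taking three of the six actions to a large extent.
example = {
    "predict_future_threats": "large extent",
    "automate_manual_tasks": "large extent",
    "real_time_data_analysis": "large extent",
    "faster_incident_response": "some extent",
}
print(is_ai_enthusiast(example))
```

Actions that are missing from a response, or rated below a large extent, simply do not count toward the threshold.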

As a result of harnessing AI, AI Enthusiasts report they are very well prepared to defend against bad actors conducting volumetric AI-powered attacks. These enterprises are 3.5x more likely to be very well prepared for defending against volumetric attacks compared to their peers.

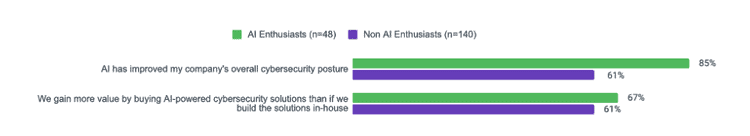

With any security solution decision, cybersecurity professionals have to weigh the benefits of building their own solutions against investing in solutions already created by reputable vendor partners. When asked, AI Enthusiasts indicated that they have gained more value from buying AI-powered solutions than building their own.

Time to Ditch the Red Tape

As enterprises scramble to skill up on their AI defenses, they are often at a disadvantage compared to fast-moving cybercriminals. Enterprises must typically overcome internal blockages and red tape, such as budget limitations and multiple layers of sign-off, before rolling out an effective new security program. As Datos Insights Chief Insights Officer Julie Conroy said in a recent webinar with Arkose Labs, bad actors don’t have to go through a maze of approvals and model governance policies to experiment with and use AI.

The research results discussed in this blog are just the tip of the iceberg. Below I’ve linked to the reports where you can access the full results and commentary. We’re operating in the AI economy, and cybersecurity teams must harness AI solutions that are resistant to adversarial AI to protect their business operations and consumers.

As mentioned, you can contact v.shetty@arkoselabs.com to set up one-on-one time to discuss the challenges you’re experiencing. Together, we can find the right solution.

To see the full research: