Arkose Labs recently reviewed various 2023 security reports, confirming that phishing attacks are “now the most common form of cybercrime, with an estimated 3.5 billion spam emails sent every day.” Phishing has been with us for decades, and consumers and employees still fall for it daily. The results are familiar: customers give up the credentials for their bank accounts, and employees click a link that lets malware into the company’s data center, after which sensitive data is exfiltrated, the company becomes a victim of ransomware, or both. And we just can’t seem to get people to stop falling for it.

As bad as it has been, it’s about to get worse. Welcome to the world of phishing and artificial intelligence (AI), especially generative AI (GenAI). This latest wave of AI has brought a whirlwind of new and powerful capabilities. Now, any new technology comes with a heavy dose of skepticism. But it has been 14 months, the jury is in, and the verdict is that GenAI is real for many use cases, and not all of them for the good. So, let’s take some time to understand what has happened and what is possible. Here are some of the documented capabilities of the new AI:

- Easily translate text from one language to many languages, with incredible fluency.

- Write text and create a graphic.

- Write code to, say, create a website or another application.

- Convert a photo into a video and have the mouth and facial movement look realistic.

- Capture 3-10 seconds of a person’s audio and create an entire speech in that person’s voice, possibly in many languages and with a convincing accent in each.

- Use a video of one person (a fraudster) to drive the video motion of a second person (say, an attractive man for a romance scam), using only a picture of that second person.

The Financial Times published an article in January 2024 [1] that provided in-depth analysis of how AI will increase financial scams. It is one of the better articles, staying grounded in the facts of what has really been learned since GenAI was announced in late 2022. The article made a key point: “The continued evolution and uptake of the technology (GenAI) means scammers do not just pose a threat to the unaware or vulnerable. Even cautious consumers are at risk of huge financial losses from AI-powered fraud.” This does not come as a surprise to me. I live in Silicon Valley, a wealthy and talented area. Yet Santa Clara County (Palo Alto/San Jose area) Deputy District Attorney Erin West tells me every week that very smart people are falling for online investment scams (called “pig butchering”) to the tune of hundreds of thousands of dollars in losses. And this is before GenAI has been used much as part of the fraud.

What this new technology allows, in effect, is much better impersonation fraud, where the “scammers pretend to be anything from a prospective romantic partner to a family member in crisis” [2], or a company vendor, the CEO or another business associate. In fact, Rachel Tobac, an expert in social engineering, impersonated an associate of a billionaire to hack the billionaire’s PC via a combination of previously hacked information, a voice impersonation call and a phishing email with a link [3]. Rachel called this person over a noisy line, using the spoofed phone number of a trusted associate, to say that an important email had just been sent and to ask that it be taken care of. What this really highlights is the ability of scammers to carry out more targeted attacks, called spear phishing. Note that Rachel’s ‘attack’ was in early 2022, BEFORE GenAI was introduced. It would be even easier for Rachel to repeat this ‘attack’ today.

What will also help make phishing more credible is the new ability to write an email in one language and have it translated into other languages. The same will be true for voice. According to the Financial Times article [2], “scammers will be able to use deepfakes to replicate people’s voices and accents in more languages, which could allow them to target more victims across borders.” We might be seeing this occur in the large scam compounds in Asia, each with thousands of scammers (often victims themselves), that exist for the purpose of romance and investment scams.

There is a lot more to talk about here, but let’s focus on phishing via email and text. We know the telco and email providers block large amounts of bogus text messages and email, but scam text messages and emails still get through. In phishing against bank websites, the fraudsters are able to place the bogus text message in the same text thread as genuine messages from the bank. The email can look just like the bank’s emails. The only difference is a slight variation in the email address (maybe a second ‘i’ or a Cyrillic character that looks like one of the characters in the real bank email address). And if the fraudster is using information from previously hacked data, the text message and email can look quite personalized. Thanks to GenAI, the fraudster can also send these messages and emails in any language with correct grammar and tone. So, it is clear that more people will be tricked into clicking the link in the text message or email. Even if only 1-2% click the link, the fraudster is quite happy.
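The Cyrillic look-alike trick can be checked for mechanically. The following is a minimal sketch, assuming that a coarse script label taken from the first word of each character’s Unicode name is good enough for illustration; the function names and the `examplebank.com` address are hypothetical and not taken from any product mentioned here.

```python
import unicodedata


def scripts_in_label(label: str) -> set[str]:
    """Return coarse script names for the letters in one domain label.

    The script is approximated by the first word of the Unicode character
    name (for example "LATIN" or "CYRILLIC"). This is a heuristic, not a
    full implementation of Unicode script detection.
    """
    scripts = set()
    for ch in label:
        if ch.isalpha():
            name = unicodedata.name(ch, "")
            if name:
                scripts.add(name.split()[0])
    return scripts


def has_mixed_script_domain(address: str) -> bool:
    """True if any label of the address's domain mixes more than one script."""
    domain = address.rsplit("@", 1)[-1]
    return any(len(scripts_in_label(label)) > 1 for label in domain.split("."))


# Hypothetical addresses: the second one swaps the Latin "e" for the
# visually identical Cyrillic "е" (U+0435).
genuine = "alerts@examplebank.com"
spoofed = "alerts@exampl\u0435bank.com"

print(has_mixed_script_domain(genuine))  # False
print(has_mixed_script_domain(spoofed))  # True
```

A check like this only catches mixed-script labels. It would not flag the “second ‘i’” style of variation, which stays entirely within Latin characters.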

So, it remains important to protect against phishing that is used to steal credentials for financial institution (FI) websites and mobile apps. Fraudsters operate in two ways.

Method 1

The fraudster copies an FI’s website, with the only change being the domain name. The domain name will be a ‘look-alike’ domain (again, one character off or with a Cyrillic character substituted). The customer clicks the link in the text message or email and is taken to the fraudster’s copy of the bank website. The customer logs into this bogus website and the fraudster collects the credentials. Afterwards, the fraudster may bring up an error page or even take the customer to the real bank website.
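A “one character off” domain can be described as a domain within edit distance 1 of a genuine one. Below is a minimal sketch of that idea, assuming the defender keeps a list of its own legitimate domains; `examplebank.com`, the function names and the threshold are all hypothetical choices for illustration.

```python
def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance: the minimum number of single-character
    insertions, deletions or substitutions needed to turn a into b."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # delete ca
                            curr[j - 1] + 1,      # insert cb
                            prev[j - 1] + cost))  # substitute
        prev = curr
    return prev[-1]


def is_lookalike(candidate: str, legit_domains: list[str], max_distance: int = 1) -> bool:
    """True if candidate is not a known domain but is very close to one."""
    candidate = candidate.lower()
    legit = [d.lower() for d in legit_domains]
    if candidate in legit:
        return False
    return any(edit_distance(candidate, d) <= max_distance for d in legit)


legit = ["examplebank.com"]  # hypothetical genuine domain
print(is_lookalike("examplebannk.com", legit))        # True: one extra letter
print(is_lookalike("exampl\u0435bank.com", legit))    # True: Cyrillic "е" swapped in
print(is_lookalike("examplebank.com", legit))         # False: the genuine domain
```

A swapped-in Cyrillic character counts as a single substitution, so the same check covers both variations mentioned above. A threshold of 1 is an assumption; short domain names would produce false positives at larger thresholds.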

Method 2

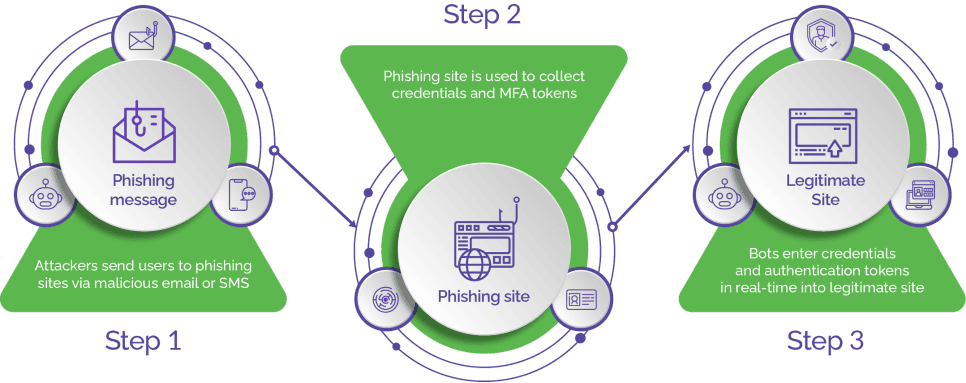

Method 2 is more complicated. Again, the fraudster copies the real bank website, but this time connects the bogus website to the real bank site. When the customer is taken to this bogus site, they enter their credentials. But this time, behind the scenes, the fraudster uses these credentials to log into the real bank (a bot-type activity). If no additional authentication is required (e.g., an OTP code), the fraudster is in and can make unauthorized transactions. The customer might be given a ‘site down’ message.

But if additional authentication is required, such as an OTP code, the fraudster takes the OTP code page from the real site and presents it to the customer on the bogus website. The customer gets a text message with the code and enters it into the bogus site. The bogus site collects the OTP code, a bot enters it into the real site, and the fraudster can again make transactions. Chart 1 describes how these man-in-the-middle attacks work, using reverse proxies. One of the more effective man-in-the-middle programs is called EvilProxy.

Chart 1: What is Man-in-the-Middle Phishing

According to Arkose Labs’ vice president of product Vikas Shetty [4]: “To make the reverse proxy attack more believable, the actor may obtain SSL/TLS certificates for the fake domain. This gives the appearance of a secure connection to the user.” Shetty goes on to say: “As users interact with the proxied fake site (bank, eCommerce, healthcare sites), the attacker collects sensitive information such as usernames, passwords, credit card details and any other data they’re targeting.”

He also describes how EvilProxy is a phishing-as-a-service model, where bad guys can simply rent the service.
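To see why an OTP code does not stop the Method 2 relay, it can help to model the flow. The following is a toy, in-memory simulation, not a description of how EvilProxy is implemented: the `Bank` and `RelayProxy` classes, the `sms_outbox` dictionary standing in for the customer’s phone, and the sample username and password are all hypothetical.

```python
import secrets


class Bank:
    """Toy stand-in for the real bank site."""

    def __init__(self, users: dict[str, str]):
        self.users = users        # username -> password
        self.sms_outbox = {}      # username -> OTP "texted" to the customer
        self.sessions = {}        # session token -> username

    def login(self, username: str, password: str) -> bool:
        if self.users.get(username) != password:
            return False
        # Password accepted: text a six-digit one-time code to the customer.
        self.sms_outbox[username] = f"{secrets.randbelow(10**6):06d}"
        return True

    def submit_otp(self, username: str, code: str):
        expected = self.sms_outbox.pop(username, None)
        if expected is None or not secrets.compare_digest(expected, code):
            return None
        token = secrets.token_hex(16)
        self.sessions[token] = username
        return token


class RelayProxy:
    """Toy look-alike site that forwards whatever the customer types."""

    def __init__(self, bank: Bank):
        self.bank = bank
        self.captured = []
        self.stolen_session = None

    def login(self, username: str, password: str) -> bool:
        self.captured.append((username, password))
        return self.bank.login(username, password)

    def submit_otp(self, username: str, code: str) -> None:
        # The session goes to the proxy; the customer sees 'site down'.
        self.stolen_session = self.bank.submit_otp(username, code)


bank = Bank({"alice": "correct horse"})
proxy = RelayProxy(bank)

proxy.login("alice", "correct horse")   # customer types into the bogus site
code = bank.sms_outbox["alice"]         # customer reads the genuine text message
proxy.submit_otp("alice", code)         # ...and types the code into the bogus site

print(proxy.stolen_session in bank.sessions)  # True
```

In this model the bank receives a correct password and a correct, fresh OTP code, so the credentials alone give it no way to tell the relay from the customer.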

Summary

Fraudsters will pick up the new AI/GenAI capabilities to steal more money through frauds and scams. Although fraudsters will have several attack vectors to choose from, phishing will surely be one of the most important and scalable. And these new phishing attacks will be quite dangerous. Two final thoughts on how dangerous:

- The UK’s cybersecurity agency, the National Cyber Security Centre, warns: “Artificial intelligence will make it difficult to spot whether emails are genuine or sent by scammers and malicious actors” [5].

- Security expert Roger Grimes says what really scares him is “when you see AI interact and correspond in conversation with very realistic-looking responses” [6]. He says that when a victim is suspicious of an email, they may reply with questions. But the new AI will allow for well-crafted, legitimate-looking responses to the victim, using the ‘industry vernacular’ in the correspondence.

The accuracy and focus of the phishing messages will become really good and, in some cases, very targeted. And with the advanced capabilities to translate text into other languages, non-English-speaking countries will see more phishing. So, phishing will become a more international problem.

The good news is there are solutions to help protect against these two phishing methods. And some of these solutions revolve around bot protection, which can also be applicable to credential stuffing and online account opening threats. As an aside, as you read this blog and see what EvilProxy can do to bypass OTP codes as a step-up authenticator, ask yourself if it is also time to upgrade your authentication. In the interim, you will still need strong protection to prevent phishing attacks from being effective.

1. “AI heralds the next generation of financial crime”, Siddharth Venkataramakrishnan, Financial Times, 1/18/24

2. “UK banks prepare for deepfake fraud wave”, Akila Quinio, Financial Times, 1/19/24

3. “It Was Easy to Hack a Billionaire”, Rachel Tobac, https://lnkd.in/eYe8isty, March 12, 2022

4. “Taking on EvilProxy: advancements in Phishing Protection”, Vikas Shetty, 1/17/24

5. “AI will make scam emails look genuine, UK cybersecurity agency warns”, Dan Milmo, 1/23/24

6. “AI Does Not Scare Me. What Scares me about AI!”, Roger Grimes, 1/22/24