Online account opening is one of the most important functions for banks today and one of the key ways they can grow. Customers no longer have to visit a branch to become customers, which saves them at least two hours. It can also be a real cost saver for the bank, because the customer does all of the data entry: they pull out their driver’s license and show it to the camera on their phone or PC, the bank checks some data and vets the license, and a new account is created. What could go wrong?

Threats to online account opening

Plenty can go wrong, because fraudsters have targeted online account opening as a key way to make money. And lots of it! Let’s first look at the threats to online account opening:

- Stolen PII: There are tens of millions of records of customer PII available on the dark web.

- Synthetic IDs: Fraudsters have been creating IDs partially based on real data to launch synthetic identity theft (e.g., using a dead person’s SSN and fabricating the rest of the PII).

- Frankenstein IDs: PII totally fabricated by GenAI (e.g., names, addresses, email addresses, and phone numbers that all look legitimate but would not pass sound controls).

- Bogus applications: Thousands of them are being automatically created by bots.

- Human fraud farms: These help create the accounts and bypass controls such as traditional CAPTCHAs.

- Bogus identity documents: GenAI makes it much easier to create fake documents and defeat liveness testing.

Let’s now look at one of the key ways fraudsters combine the threats above to attack online account opening effectively. The best and cheapest way for a fraudster to execute this attack is with bad bots. Before we continue, here are a few definitions.

- Basic Bot: an automated script that can complete one or more computer functions (e.g., completing a 10-page account application).

- Intelligent Bot: a series of automated scripts that can complete a number of complex, context-aware computer functions (e.g., entering the application data, modulating the pace of that data entry, and solving CAPTCHAs).

- Human Fraud Farms: people who enter the data for new accounts or solve traditional CAPTCHA challenges. They are typically workers in low-income countries earning $5 a day or, more recently, victims of coerced labor forced under threat to do this work. These workers may be held captive in patrolled fraud compounds in Cambodia, Thailand, or other countries.

Together, malicious bots and human fraud farms can effectively generate thousands of new account applications. Fraudsters create these accounts for many purposes, including:

- to create a money mule account to receive fraudulent funds.

- to establish an account to create a synthetic persona for future fraudulent activities.

Account opening in the wild

Now, let’s take the example of a fraudster who wants to open 5,000 new accounts. With bot scripts, the fraudster can be devious. First, they make sure the device fingerprint (user agent string, screen size, etc.) varies across the 5,000 applications. Second, they send the applications from 5,000 different IP addresses (ideally on residential and mobile ISPs).

To send these applications from thousands of IP addresses, fraudsters need to own or rent a large number of compromised PCs. With cybercrime-as-a-service (CaaS), it is easy to buy the components needed to complete the attack, including bot PCs. The applications also need to look as if they come from real PCs and mobile devices. Mobile emulators run virtually on PCs, so the bot can sit in front of a mobile emulator, and the emulator defines whether each device appears to be Apple (sometimes more popular for banks) or Android, along with its varied mobile device fingerprint.
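To see why this variation matters, consider a naive control that flags any IP address or device fingerprint appearing on more than a few applications. The sketch below is a minimal illustration under assumed data, not any vendor’s actual rule: the `Application` record, the `flag_reused_keys` helper, the threshold of 3, and the address and fingerprint labels are all hypothetical.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Application:
    """Hypothetical, simplified account-opening application record."""
    ip: str
    fingerprint: str  # stand-in for a hash of user agent string, screen size, etc.


def flag_reused_keys(apps: list[Application], max_per_key: int = 3) -> set[str]:
    """Return IPs and fingerprints seen on more than max_per_key applications."""
    ip_counts = Counter(a.ip for a in apps)
    fp_counts = Counter(a.fingerprint for a in apps)
    flagged = {ip for ip, n in ip_counts.items() if n > max_per_key}
    flagged |= {fp for fp, n in fp_counts.items() if n > max_per_key}
    return flagged


# Unsophisticated attack: 5,000 applications from one IP and one device fingerprint.
naive = [Application("203.0.113.7", "fp-A") for _ in range(5000)]

# Rotated attack: each application gets its own IP and fingerprint
# (placeholder labels standing in for 5,000 residential/mobile IPs).
rotated = [Application(f"ip-{i:04d}", f"fp-{i:04d}") for i in range(5000)]

print(sorted(flag_reused_keys(naive)))    # ['203.0.113.7', 'fp-A']
print(sorted(flag_reused_keys(rotated)))  # []
```

The same 5,000 applications go from fully flagged to invisible once every key is unique, which is why fraudsters invest in compromised PCs, emulators, and varied fingerprints rather than reusing one device.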

David Mouatt, VP at Arkose Labs, explains, “Fraud is a business and like any other business, they are looking for tools they can leverage to streamline and enhance their productivity. This has led to the rise of CaaS allowing the fraudsters to easily use GenAI and other tools without the need to build it themselves.”

Third, bad actors pace the applications over several hours so that a high volume in a short period does not trigger a velocity alert. Fourth, they draw the application data from a number of sources:

- stolen PII

- synthetic ID data

- GenAI-created application data (a newer source)
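The pacing in the third step works because a velocity rule only sees what falls inside its window. Here is a minimal sketch of a sliding-window velocity check, assuming a single global count of applications; the 15-minute window, the threshold of 200, and the 10-minute and 12-hour schedules are illustrative assumptions, and `max_in_window` and `velocity_alert` are hypothetical helpers.

```python
from collections import deque


def max_in_window(timestamps: list[float], window_s: float) -> int:
    """Largest number of events whose timestamps span less than window_s seconds."""
    recent: deque = deque()
    best = 0
    for t in sorted(timestamps):
        recent.append(t)
        # Evict events that are window_s or more seconds older than the current one.
        while t - recent[0] >= window_s:
            recent.popleft()
        best = max(best, len(recent))
    return best


def velocity_alert(timestamps: list[float], window_s: float = 900.0, threshold: int = 200) -> bool:
    """Hypothetical rule: alert if more than `threshold` applications arrive within 15 minutes."""
    return max_in_window(timestamps, window_s) > threshold


N = 5000
burst = [i * (600 / N) for i in range(N)]        # all 5,000 in 10 minutes
paced = [i * (12 * 3600 / N) for i in range(N)]  # same 5,000 spread over 12 hours

print(velocity_alert(burst))        # True
print(velocity_alert(paced))        # False
print(max_in_window(paced, 900.0))  # 105 (one application every 8.64 s)
```

Spreading the same volume over 12 hours cuts the peak that a 15-minute window can see from 5,000 to 105. Widening the window or comparing against normal daily volume are the obvious counters, each with its own false-positive trade-off.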

We are starting to see GenAI used in bogus new account opening. GenAI bots can create application data out of thin air: names, addresses, SSNs, phone numbers, and email addresses that look legitimate on the surface.

GenAI on the scene

The bot scripts can be written to enter data across, say, 10 screens of input, or, at some fintechs I have seen, just one or two screens. With GenAI, I think we will soon see scripts that mimic a human’s data entry behavior field by field. For example, a human enters their own Social Security number quickly and in a characteristic rhythm (3 digits, 2 digits, 4 digits), because that is how it is stored in their long-term memory.
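One way to picture the 3-2-4 rhythm is as a keystroke-timing feature. The sketch below is a minimal illustration, assuming the form records a timestamp (in milliseconds) for each of the nine SSN digits; the helper names, the 1.5x ratio, and the sample timings are hypothetical, and a real behavioral model would combine many signals rather than this one.

```python
from itertools import accumulate
from statistics import median

# Gap i is the pause between keystroke i and keystroke i + 1 (0-based).
# For an SSN typed as 3-2-4, the chunk boundaries fall after the 3rd and 5th digits.
SSN_BOUNDARY_GAPS = {2, 4}


def inter_key_gaps(times_ms: list[float]) -> list[float]:
    return [b - a for a, b in zip(times_ms, times_ms[1:])]


def shows_ssn_chunking(times_ms: list[float], min_ratio: float = 1.5) -> bool:
    """True if the two longest pauses sit at the 3-2-4 boundaries and are at
    least min_ratio times the median pause."""
    if len(times_ms) != 9:
        raise ValueError("expected one timestamp per SSN digit")
    gaps = inter_key_gaps(times_ms)
    longest_two = sorted(range(len(gaps)), key=lambda i: gaps[i], reverse=True)[:2]
    typical = median(gaps)
    return set(longest_two) == SSN_BOUNDARY_GAPS and all(
        gaps[i] >= min_ratio * typical for i in longest_two
    )


# Hypothetical timings (ms): fast within chunks, longer pauses between them.
human = list(accumulate([110, 120, 340, 105, 310, 115, 100, 125], initial=0))
# Hypothetical basic bot: perfectly even 150 ms spacing.
bot = list(accumulate([150] * 8, initial=0))

print(shows_ssn_chunking(human))  # True
print(shows_ssn_chunking(bot))    # False
```

A basic bot with even spacing fails this check, but the pattern is simple enough that a GenAI-driven script trained on real typing could learn to reproduce it.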

If I were a fraudster, I might even set up a bogus website to get people to enter their PII, then let GenAI analyze that data entry to train it. GenAI can also replace the human in defeating traditional CAPTCHAs: a script simply sends the CAPTCHA to GenAI to answer (e.g., “Where are the buses in these photos?”).

The bot scripts can also automatically capture the information returned for every opened account and test the new credentials to confirm each account is open. The bots can even fund the new account automatically from, say, a stolen credit card.

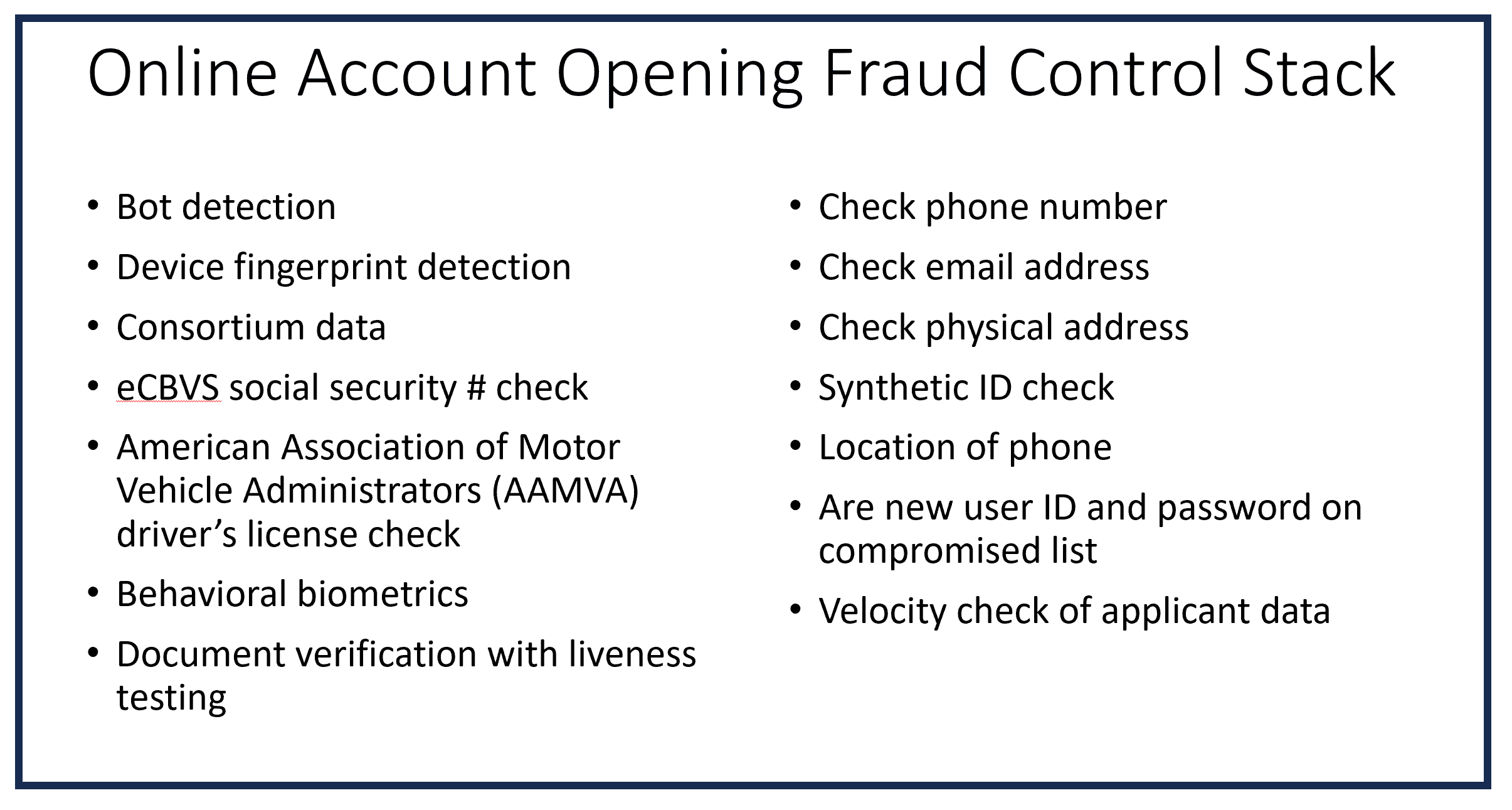

Now, depending on the size of the financial institution, you might have some or all of the controls listed below:

The problem is that, with limited project funding, many of the required controls may not get approved. Meanwhile, the fraudster’s goal is to do as much damage as possible with the least effort and cost. As a result, large financial institutions get more controls and see less account-opening fraud, while medium-size and smaller institutions have fewer controls and see more fraud. Fraudsters understand this, so they disproportionately attack medium-size and smaller institutions with smart, efficient bots to open fraudulent accounts. They might open 500 new accounts per day rather than 5,000, but they will deploy all of their “tricks”, and this puts medium-size and smaller institutions at real risk.

Breaking bad bots

Now, how real are these bot capabilities? In November, Arkose released a study on bots, Breaking (Bad) Bots: Bot Abuse Analysis and Other Fraud Benchmarks in Q4 2023. Here are some of the key takeaways:

- 164% increase in bot-driven fake new bank accounts.

- A significant uptick over the past six months in bad actors’ use of GenAI for content generation. GenAI is also being used for web scraping.

- From Q1 2023 to Q2 2023, intelligent bot traffic nearly quadrupled and heavily contributed to a total increase of approximately 167% for all bot traffic.

- There was a 49% increase in human fraud farm attacks from Q1 to Q2 2023, and 40% of fraud farm attacks targeted fake account registration.

- Fake account registration at financial services firms increased steadily throughout 2023, growing 30% from Q2 to Q3 2023.

- Bot attacks via mobile devices grew in 2023 and now account for 44% of all bot attacks.

Summary

In this blog, I took a different perspective on the risks of online account opening for a bank by looking at what fraudsters actually do to create account-opening transactions. The same narrative applies to opening accounts for e-commerce, online gaming, and dating sites, and to new credit card or loan applications. I wanted to highlight the new ways fraudsters get what they want effectively and at low cost.

With automation and new capabilities like GenAI, which could dramatically change how fraudsters attack online account opening and other online services, they really are doing some amazing things. As fraud fighters, we have to do amazing things too to counter these criminals.

Contact us today to learn more about protecting your business.