What is New Account Fraud?

New account fraud involves creating fake accounts using stolen or synthetic identities to carry out fraudulent activities. This type of fraud is increasingly common in the digital age, posing serious risks to both businesses and consumers.

Why Is New Account Fraud a Growing Threat?

Several factors contribute to the rise of new account fraud, making it a growing concern for many industries.

- Easily Accessible Personal Data: Stolen personal information is widely available through data breaches or on the dark web.

- Sophisticated Fraud Tools: Fraudsters use advanced tools like bots to create fake accounts quickly and at scale.

- Increased Online Services: As more services move online, the opportunities for new account fraud expand, increasing the risk for businesses.

Check out our New Account Fraud Solution Page.

How New Account Fraud Happens

The process of new account fraud typically involves a few key steps, each designed to bypass security measures and exploit vulnerabilities.

- Acquiring Personal Data: Fraudsters obtain the necessary personal information through breaches, phishing, or purchasing data.

- Creating Fake Accounts: Using automated tools, they set up fake accounts to exploit digital platforms.

- Executing Fraudulent Activities: These accounts are then used to commit various fraudulent acts, such as financial scams or identity theft.

How Do Fraudsters Create Fake Accounts?

Fraudsters employ various tactics to create fake accounts, often leveraging stolen data and sophisticated methods to evade detection.

- Data Breaches and Identity Theft

Fraudsters use stolen personal information from data breaches to create fraudulent accounts. This data allows them to mimic legitimate account holders, making the fake accounts appear authentic.

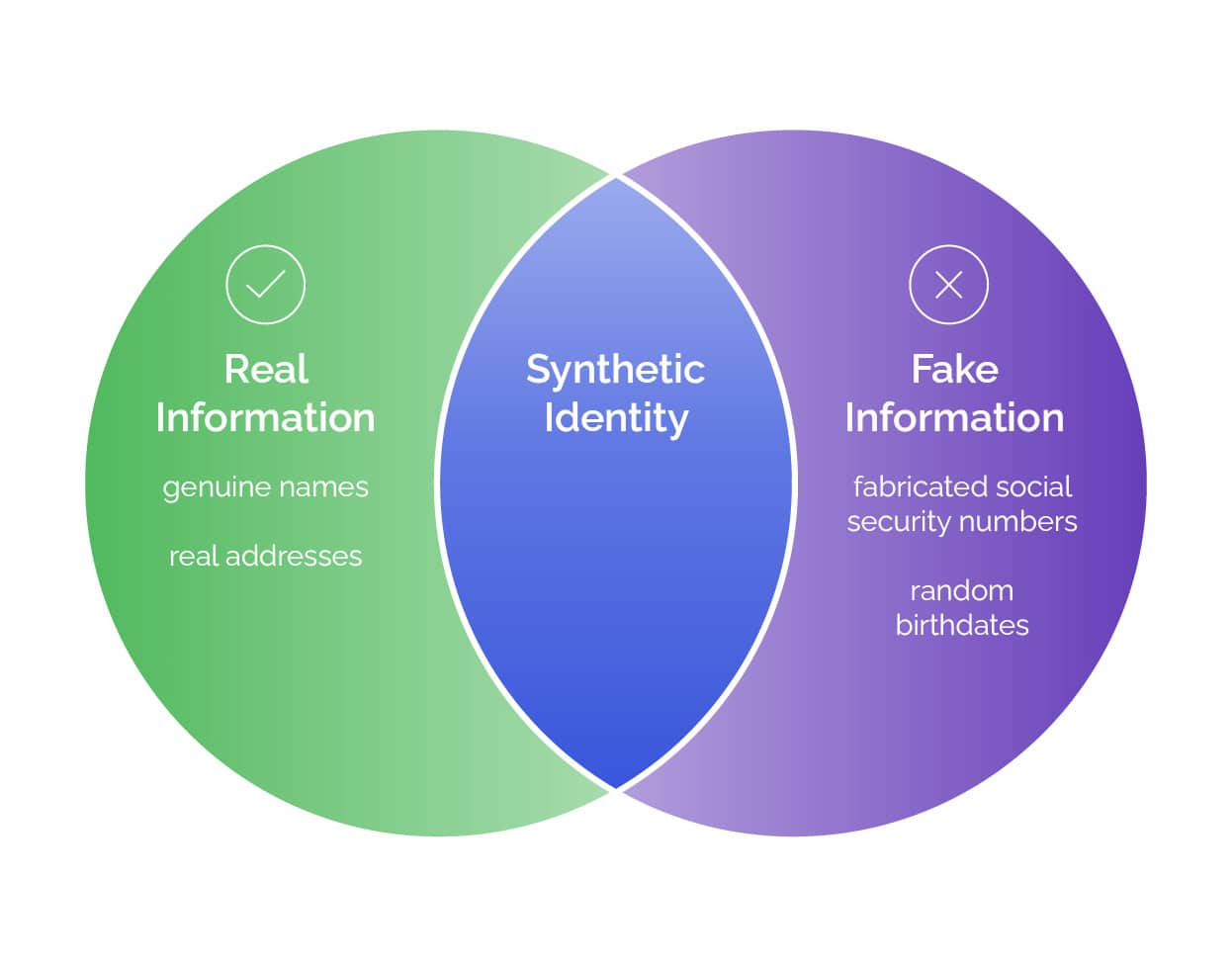

- Synthetic Identities

They also create synthetic identities by combining real and fake information, making it harder for traditional security measures to detect these fake profiles.

- Botnets and Human Click Farms

Automated bots and human-operated click farms are used to scale the fraud. Bots can create thousands of fake accounts quickly, while human click farms handle tasks that require more nuance, such as writing fake reviews or testing stolen credit card credentials.
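One common heuristic for spotting the synthetic identities described above is to look for a single identifier that appears alongside several different names. The following is a minimal sketch of that idea, not a description of any particular product. The field names `national_id` and `name` and the function `find_shared_identifiers` are hypothetical, chosen only for illustration.

```python
from collections import defaultdict
from typing import Dict, Iterable, List


def find_shared_identifiers(applications: Iterable[dict]) -> Dict[str, List[str]]:
    """Return identifiers that appear with more than one distinct name.

    Each application is assumed (hypothetically) to be a dict with
    'national_id' and 'name' keys.
    """
    names_by_id = defaultdict(set)
    for app in applications:
        # Normalize whitespace and case so trivial variations are not
        # counted as different names.
        normalized_name = app["name"].strip().lower()
        names_by_id[app["national_id"]].add(normalized_name)

    # Keep only identifiers reused under several different names.
    return {
        national_id: sorted(names)
        for national_id, names in names_by_id.items()
        if len(names) > 1
    }
```

A match is only a signal for review, since legitimate name changes can also produce it.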

Which Industries Are Most Vulnerable to New Account Fraud?

New account fraud affects various industries, each facing unique challenges and risks due to the nature of their operations.

Banking and Financial Services

Banks are prime targets for fraudsters who create fake accounts to launder money and conduct unauthorized transactions, leading to significant financial and reputational damage.

E-commerce and Retail

Fraudsters exploit promotions and discounts by creating fake accounts to make fraudulent purchases, harming both businesses and legitimate customers.

Online Gaming and Gambling

Fake accounts are used to cheat, exploit bonuses, and launder money, undermining the integrity and fairness of gaming platforms.

Healthcare

In the healthcare sector, fraudsters create fake accounts to file false insurance claims and gain unauthorized access to medical services, compromising patient data and increasing costs.

Telecommunications

Fraudsters target telecom companies by creating fake accounts to acquire expensive devices and services fraudulently, leading to inventory losses and higher operational costs.

How to Detect New Account Fraud

Detecting new account fraud involves identifying red flags and using specialized detection and mitigation software. Early detection is key to preventing fraud from escalating into more significant issues. By monitoring unusual behaviors and patterns during the account creation process, businesses can identify potential threats and act swiftly.
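As one illustration of monitoring patterns during account creation, the sketch below counts signups per source within a sliding time window and flags a source that exceeds a limit, which is the kind of burst that bot-driven account creation tends to produce. The class name `SignupVelocityMonitor`, the default limits, and the idea of keying on a source string (such as an IP address or device ID) are assumptions made for this example.

```python
from collections import defaultdict, deque


class SignupVelocityMonitor:
    """Flags sources that create too many accounts in a short window.

    The limits are hypothetical defaults. Timestamps are seconds and are
    assumed to arrive in non-decreasing order for each source.
    """

    def __init__(self, max_signups: int = 5, window_seconds: float = 60.0):
        self.max_signups = max_signups
        self.window_seconds = window_seconds
        self._events = defaultdict(deque)  # source -> recent signup timestamps

    def record(self, source: str, timestamp: float) -> bool:
        """Record a signup; return True if the source exceeds the limit."""
        events = self._events[source]
        events.append(timestamp)
        # Drop signups that have fallen out of the sliding window.
        while events and timestamp - events[0] > self.window_seconds:
            events.popleft()
        return len(events) > self.max_signups
```

For example, with `max_signups=3` and `window_seconds=10`, the fourth signup from the same source within ten seconds would be flagged, while a signup arriving much later would not.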

Enhance your fraud detection strategy with our powerful account takeover detection and mitigation software and stay one step ahead of fraudsters.

What Are the Red Flags of New Account Fraud?

- Unusual Identity Information

Inconsistencies in the personal details provided, such as mismatched names or addresses, can indicate fraudulent activity.

- High-Risk Locations

Account applications that originate from high-risk geographic areas, or that show sudden location changes, should be closely monitored.

- Unusual Account Activity

Rapid or suspicious changes in account behavior, like large transactions soon after account creation, are significant indicators of potential fraud.
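The three red flags above can be expressed as simple rules. The sketch below is illustrative only: the data classes, the country codes in `HIGH_RISK_COUNTRIES`, the amount threshold, and the 24-hour window are all hypothetical values, and comparing the IP country with the stated address country is an assumed stand-in for a location check.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List

# Hypothetical values chosen for illustration only.
HIGH_RISK_COUNTRIES = {"XX", "YY"}
LARGE_TXN_AMOUNT = 1000.00
NEW_ACCOUNT_WINDOW = timedelta(hours=24)


@dataclass
class Signup:
    name_on_application: str
    name_on_id_document: str
    address_country: str
    ip_country: str
    created_at: datetime


@dataclass
class Transaction:
    amount: float
    timestamp: datetime


def red_flags(signup: Signup, transactions: List[Transaction]) -> List[str]:
    """Return the names of the red flags raised by a new account."""
    flags = []

    # Unusual identity information: the provided details do not match.
    applied = signup.name_on_application.strip().lower()
    documented = signup.name_on_id_document.strip().lower()
    if applied != documented:
        flags.append("identity_mismatch")

    # High-risk location: a listed country, or the IP and address disagree.
    if (signup.ip_country in HIGH_RISK_COUNTRIES
            or signup.ip_country != signup.address_country):
        flags.append("high_risk_location")

    # Unusual account activity: a large transaction soon after creation.
    for txn in transactions:
        age = txn.timestamp - signup.created_at
        if txn.amount >= LARGE_TXN_AMOUNT and timedelta(0) <= age <= NEW_ACCOUNT_WINDOW:
            flags.append("large_early_transaction")
            break

    return flags
```

In practice, rules like these would likely feed a broader risk assessment rather than block an account on their own.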

Using New Account Detection & Mitigation Software

Specialized software plays a crucial role in identifying and mitigating new account fraud by analyzing patterns and behaviors in real time. These tools, like Arkose Labs' mitigation software, can detect suspicious activities early, stop fraudulent account creation, and reduce the risk of downstream fraud.

By implementing such advanced solutions, businesses can effectively protect themselves from the financial and reputational damages associated with new account fraud.

Protect your business from automated threats with our cutting-edge bot management software, designed to stop bots and secure your platform.

How to Prevent New Account Fraud

Preventing new account fraud requires a combination of advanced strategies, including the use of security tools and attack detection and mitigation software. By leveraging these technologies, businesses can protect themselves against fraudulent account creation and the associated risks.

What Strategies Can Businesses Implement?

- Smarter Authentication with Targeted Friction: Balance user experience with fraud prevention by applying friction where necessary to deter fraudsters without disrupting legitimate users.

- Multi-Factor Authentication (MFA): Add layers of security by requiring multiple forms of verification, making it harder for fraudsters to gain unauthorized access.

- Real-Time Monitoring: Monitor account activity as it happens to catch fraudulent behavior early and prevent further damage.
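Targeted friction can be sketched as a mapping from a risk score to an action, so that low-risk users pass without interruption and only riskier sessions face an extra step such as MFA. This is a minimal sketch under stated assumptions: the 0-to-1 score, the two thresholds, and the names `Action` and `choose_action` are hypothetical and do not describe any specific vendor's implementation.

```python
from enum import Enum


class Action(Enum):
    ALLOW = "allow"          # no added friction
    CHALLENGE = "challenge"  # step-up check, e.g. MFA
    BLOCK = "block"          # reject the account creation attempt


# Hypothetical thresholds on a risk score between 0.0 and 1.0.
CHALLENGE_THRESHOLD = 0.4
BLOCK_THRESHOLD = 0.8


def choose_action(risk_score: float) -> Action:
    """Pick the amount of friction to apply for a given risk score."""
    if not 0.0 <= risk_score <= 1.0:
        raise ValueError("risk_score must be between 0.0 and 1.0")
    if risk_score >= BLOCK_THRESHOLD:
        return Action.BLOCK
    if risk_score >= CHALLENGE_THRESHOLD:
        return Action.CHALLENGE
    return Action.ALLOW
```

Where the thresholds sit is a business decision: lowering them catches more fraud but adds friction for more legitimate users.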

How Can New Account Detection & Mitigation Software Help Prevent Fraud?

Advanced Software Solutions: Tools like Arkose Labs' software use AI, machine learning and behavioral analysis to proactively identify and prevent new account fraud, helping businesses stay ahead of evolving threats.

Discover how our industry-leading account takeover detection and mitigation software can enhance your fraud prevention strategy.

Arkose Labs: Leading the Fight Against New Account Fraud

Arkose Labs offers robust solutions to detect, mitigate and prevent new account fraud by combining technologies such as advanced ingestion signals and AI-resistant challenges.

These tools allow businesses to accurately differentiate between legitimate users and fraudsters, reducing false positives and improving overall security.

Arkose Labs’ success stories highlight its effectiveness in stopping fraud at scale, ensuring businesses maintain their integrity and customer trust while preventing financial and reputational damage. Request a demo to see how Arkose Labs can protect your business.