Why Arkose Titan

The Only Platform That Detects, Prevents, and Neutralizes Fraud at Every Touchpoint

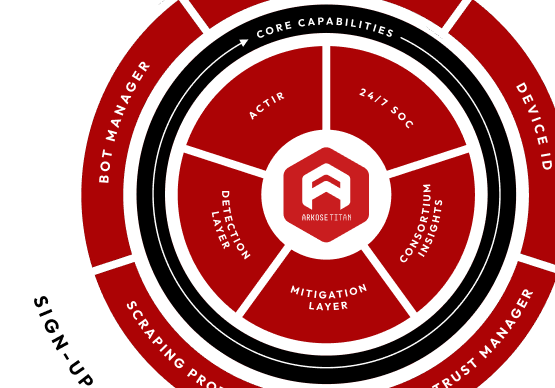

Arkose Labs delivers the industry's only unified fraud prevention platform, protecting your entire digital ecosystem against both traditional threats and emerging AI-driven threats while maintaining the seamless customer experience that drives growth.