Welcome to the Arkose Labs AWS EKS cost savings blog! Join us as we explore the tools, checklists, and best practices that enabled us to reduce our compute costs by more than 40%. Our journey highlights the importance of the Cost Optimization pillar, one of the six pillars of the AWS Well-Architected Framework for maximizing the benefits of the public cloud.

Although there's no such thing as a free public cloud service, AWS offers tools to help you monitor your spending. For the Arkose Labs platform, we analyzed our spending and identified areas for improving compute efficiency. Here are the five areas that yielded the highest return on investment:

- Graviton - ~25% price-performance improvement

- Spot instances - 60% to 70% reduction

- Bin packing - choose the right instance sizes to reduce unallocated resources

- Resource limits - tune pod CPU and memory requests and limits to optimize resources

- Autoscale - adjust compute resources based on cyclical traffic

About Graviton

Graviton is AWS's family of ARM-based processors; ARM is a RISC architecture. We load tested our applications on Graviton and on x86 instances with similar configurations. P90 and P95 latency was 22% lower on ARM.

Additionally, Graviton instances are cheaper than comparable x86 instances. For example, a c5.4xlarge x86 EC2 instance is $0.68/hr, while a c6g.4xlarge Graviton instance is $0.54/hr: the Graviton instance is about 20% cheaper or, put the other way, the x86 instance costs roughly 25% more. Most modern languages provide ARM-compatible libraries. Even so, Arkose Labs performed exhaustive testing to validate application performance and avoid surprises.
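
The size of the price gap depends on which instance you treat as the baseline. The sketch below works through the arithmetic with the two hourly prices quoted above; the function names are ours, chosen only for illustration.

```python
def savings_pct(baseline_hourly: float, candidate_hourly: float) -> float:
    """Percent saved by switching from the baseline to the candidate."""
    return (baseline_hourly - candidate_hourly) / baseline_hourly * 100


def premium_pct(baseline_hourly: float, candidate_hourly: float) -> float:
    """Percent extra paid for the baseline, relative to the candidate."""
    return (baseline_hourly - candidate_hourly) / candidate_hourly * 100


X86_HOURLY = 0.68       # c5.4xlarge price quoted in this post
GRAVITON_HOURLY = 0.54  # c6g.4xlarge price quoted in this post

saved = savings_pct(X86_HOURLY, GRAVITON_HOURLY)
extra = premium_pct(X86_HOURLY, GRAVITON_HOURLY)
print(f"Graviton is {saved:.1f}% cheaper; x86 costs {extra:.1f}% more")
```

Prices vary by region and change over time, so it is worth rerunning the numbers with current pricing before committing to a migration.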

How to run Graviton on EKS

Graviton can offer significant performance improvements and cost savings for applications running on AWS EKS, but getting started can be challenging if you are new to the technology. Here's what we did:

- Built a Graviton AMI by updating our image creation scripts to use ARM packages. All the libraries we used provided ARM binaries.

- Created new Auto Scaling groups with ARM EC2 instances.

- Updated the application Docker image build process to target the ARM architecture.

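    # If the runner is x86, add docker/setup-qemu-action before this step
    # so that arm64 images can be built under emulation.
    - name: Set up Docker Buildx
      uses: docker/setup-buildx-action@v2
    - name: Build and push
      uses: docker/build-push-action@v3
      with:
        context: .
        push: true
        tags: ${{ env.ECR_REGISTRY }}/${{ env.ECR_REPOSITORY }}:${{ env.IMAGE_TAG }}
        # Target architecture(s) for the image, one per line
        platforms: |
          linux/arm64
        file: Dockerfile

(GitHub Actions snippet)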
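
Once an image builds for linux/arm64, it can be worth confirming in a smoke test that the container really runs on the architecture you expect. The following is a minimal sketch, not part of the pipeline described here; the helper name `is_arm64` is hypothetical.

```python
import platform

# Machine names commonly reported for 64-bit ARM
# (Linux reports "aarch64", macOS reports "arm64").
ARM64_NAMES = {"aarch64", "arm64"}


def is_arm64(machine: str) -> bool:
    """Return True if a platform.machine() string denotes 64-bit ARM."""
    return machine.strip().lower() in ARM64_NAMES


if __name__ == "__main__":
    machine = platform.machine()
    print(f"running on {machine}: arm64={is_arm64(machine)}")
```

Running this inside the container on a Graviton node should report an ARM machine name; on an x86 node it should not.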

Spot instances

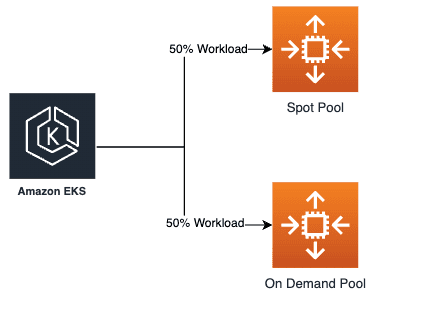

AWS Spot Instances are spare EC2 capacity that Amazon Web Services (AWS) sells at a significantly lower price than on-demand instances. They can be used for a variety of computing tasks such as data analysis, machine learning, and web hosting. Bidding is no longer required: you pay the current Spot price, which AWS adjusts gradually based on long-term supply and demand, and you can optionally set a maximum price you are willing to pay.

However, EC2 can reclaim a Spot Instance with a two-minute warning when it needs the capacity back, or when the Spot price rises above your maximum price. Spot is therefore best suited for fault-tolerant applications that can handle interruptions and can be paused and resumed without losing data. Overall, AWS Spot Instances provide a flexible and cost-effective way to access high-performance computing resources in the cloud.

Spot instances were a good fit for Arkose Labs' stateless applications. However, we decided to run a 50/50 mix of Spot and on-demand to avoid service outages. EKS managed node groups don't support mixing on-demand and Spot, so we created two Auto Scaling groups, one with Spot and the other with on-demand, and used Kubernetes topology spread constraints to spread the workload evenly between them.

    topologySpreadConstraints:
      - maxSkew: 1
        topologyKey: eks.amazonaws.com/capacityType
        whenUnsatisfiable: ScheduleAnyway
        labelSelector:
          matchLabels:
            app.kubernetes.io/instance: sample-app

Note: We chose the "ScheduleAnyway" option to handle Spot capacity shortages. The configuration above spreads pods on a best-effort basis. We later added the Kubernetes descheduler to rebalance workloads periodically.
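
To see what a maxSkew of 1 means in practice, the sketch below computes the skew the scheduler would see for each capacity type if one more pod were placed there. It follows the definition in the Kubernetes documentation (matching pods in the domain minus the global minimum) but is a simplification, and the pod counts are hypothetical.

```python
from collections import Counter

MAX_SKEW = 1  # matches maxSkew in the constraint above


def skew_if_placed(current: Counter, target: str) -> int:
    """Skew of `target` if one more matching pod lands there."""
    global_min = min(current.values())
    return current[target] + 1 - global_min


# Hypothetical state: 3 matching pods on Spot nodes, 2 on on-demand nodes.
pods = Counter({"SPOT": 3, "ON_DEMAND": 2})

for capacity_type in pods:
    skew = skew_if_placed(pods, capacity_type)
    verdict = "preferred" if skew <= MAX_SKEW else "allowed but less preferred"
    print(f"{capacity_type}: skew={skew} ({verdict})")
```

With DoNotSchedule the higher-skew placement would be rejected outright; with ScheduleAnyway it is only ranked lower, which is why pods can still land on on-demand nodes when Spot capacity runs out.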

Bin Packing

In Kubernetes, bin packing is the scheduler's job. It considers the CPU and memory requests of each pod and the unallocated resources on each node, then places new pods on nodes that can accommodate them. How tightly nodes end up packed depends on those requests and on the instance sizes you choose.

Much like a game of Tetris, bin packing helps to minimize the number of nodes required to run a Kubernetes cluster, which can reduce costs and simplify management. Done well, it lets you run more applications on less hardware while each application still gets the resources it needs.
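
As an illustration of the general idea, here is a first-fit-decreasing heuristic in Python. This is a classic bin-packing algorithm, not what the Kubernetes scheduler actually runs (the scheduler filters and scores nodes one pod at a time), and the CPU requests and node size are hypothetical.

```python
def first_fit_decreasing(pod_cpu_requests: list[float], node_cpu: float) -> list[list[float]]:
    """Pack CPU requests onto equally sized nodes, largest request first.

    Assumes every single request fits on an empty node.
    """
    nodes: list[list[float]] = []
    for request in sorted(pod_cpu_requests, reverse=True):
        for node in nodes:
            if sum(node) + request <= node_cpu:
                node.append(request)
                break
        else:
            nodes.append([request])  # no existing node had room
    return nodes


# Hypothetical CPU requests (in cores) and node size.
requests = [2.0, 1.0, 3.0, 0.5, 2.5, 1.5, 0.5]
packed = first_fit_decreasing(requests, node_cpu=4.0)
for i, node in enumerate(packed):
    print(f"node {i}: {node} -> {sum(node)} of 4.0 cores requested")
```

The same requests placed in arrival order, or on nodes whose size does not divide evenly into typical pod sizes, would leave more capacity stranded, which is the waste that instance selection tries to avoid.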

Bin Packing with KubeCost

As part of our cost analysis, we installed KubeCost to analyze our Kubernetes spend. We found that our bin packing was not optimal because of cyclical traffic in various regions. Additionally, the daemon sets for monitoring, security, and EKS itself were consuming a significant amount of resources, since they run on every node. We changed our instance types from 2xlarge to 4xlarge, which roughly halved the node count and, with it, the resources consumed by daemon sets. Reducing node count has another benefit: most third-party vendors charge per node, so those costs drop as well.

One concern with fewer, larger nodes is that all pods of an application can end up on the same node. Again, we used topology spread constraints to address this. Pods can be spread one per node, but that can inflate the node count when an application has a runaway scale-up. Spreading across three AZs is a good compromise.
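
The daemon set effect is simple arithmetic: per-node overhead scales with node count, not with node size. The sketch below uses hypothetical numbers (320 vCPUs of workload, 0.5 vCPU of daemon sets per node) and ignores the capacity the daemon sets themselves take up.

```python
import math


def daemonset_overhead(workload_vcpu: int, node_vcpu: int, daemonset_vcpu_per_node: float) -> tuple[int, float]:
    """Return (node count, total vCPU reserved by per-node daemon sets)."""
    nodes = math.ceil(workload_vcpu / node_vcpu)
    return nodes, nodes * daemonset_vcpu_per_node


WORKLOAD_VCPU = 320        # hypothetical total workload
DAEMONSET_VCPU = 0.5       # hypothetical per-node daemon set requests

small = daemonset_overhead(WORKLOAD_VCPU, node_vcpu=8, daemonset_vcpu_per_node=DAEMONSET_VCPU)   # 2xlarge
large = daemonset_overhead(WORKLOAD_VCPU, node_vcpu=16, daemonset_vcpu_per_node=DAEMONSET_VCPU)  # 4xlarge

print(f"2xlarge: {small[0]} nodes, {small[1]} vCPU for daemon sets")
print(f"4xlarge: {large[0]} nodes, {large[1]} vCPU for daemon sets")
```

The same halving applies to any cost that is billed per node, such as agent licenses.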

    topologySpreadConstraints:
      - maxSkew: 1
        topologyKey: topology.kubernetes.io/zone
        whenUnsatisfiable: ScheduleAnyway
        labelSelector:
          matchLabels:
            # Label values cannot contain spaces
            app.kubernetes.io/instance: sample-app

Resource Limits

With the help of KubeCost, we found that our EKS EC2 instances were not being utilized efficiently. KubeCost provides a detailed analysis, by namespace, of resource requests, limits, and runtime usage. It also recommended values for resource requests and limits.

We conservatively updated application resource requests and limits and tested our applications. After some tuning, we deployed resource changes to production and improved our CPU and memory efficiency by 20%.

    containers:
      - name: app
        image: image
        resources:
          requests:
            memory: "512Mi"
            cpu: "250m"
          limits:
            memory: "1Gi"
            cpu: "500m"

Kubernetes resource requests and limits constrain the amount of CPU and memory that a container can use within a cluster. Requests are what the scheduler reserves on a node when it places a pod; limits cap what the container can consume at runtime. Together they keep one application from consuming excessive resources and hurting the performance and stability of other applications on the same cluster.

By tuning these values, we improved resource utilization and ensured that applications have the resources they need to run smoothly, while preventing runaway containers from monopolizing a node. This lets us run more applications on the same cluster and gives us better control over resource usage and costs.
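
A simple way to reason about right-sizing is to compare actual usage with what was requested. The sketch below is one possible approach under stated assumptions: the 25% headroom and the usage figures are hypothetical, and this is not how KubeCost computes its recommendations.

```python
def efficiency(usage: float, request: float) -> float:
    """Fraction of the requested resource that is actually used."""
    return usage / request


def right_sized_request(peak_usage: float, headroom: float = 0.25) -> float:
    """Request that covers observed peak usage plus a safety margin."""
    return peak_usage * (1 + headroom)


# Hypothetical container: requests 500m CPU, averages 150m, peaks at 200m.
request_m, avg_usage_m, peak_usage_m = 500, 150, 200

before = efficiency(avg_usage_m, request_m)
new_request_m = right_sized_request(peak_usage_m)
after = efficiency(avg_usage_m, new_request_m)
print(f"efficiency {before:.0%} -> {after:.0%} with request {new_request_m:.0f}m")
```

Lowering a request frees capacity for the scheduler to place other pods, so changes like this are best rolled out gradually and validated under load, as described above.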

Autoscale

Like many organizations, Arkose Labs sees daily cyclical traffic: less at night, more during the day, plus some unexpected spikes. We wanted to optimize cost without negatively affecting our service performance.

We initially used CPU as the autoscaling metric, which worked for a while. However, CPU is a lagging indicator of load on the system. We switched to an external connections metric from Splunk, which is a leading indicator. This change let us reduce the spare capacity we kept in the cluster and be truly elastic.

    spec:
      behavior:
        scaleDown:
          policies:
            - periodSeconds: 600
              type: Percent
              value: 10
            - periodSeconds: 600
              type: Pods
              value: 4
          selectPolicy: Min
          stabilizationWindowSeconds: 600
        scaleUp:
          policies:
            - periodSeconds: 60
              type: Percent
              value: 25
            - periodSeconds: 60
              type: Pods
              value: 4
          selectPolicy: Max
          stabilizationWindowSeconds: 0
      maxReplicas: 10
      metrics:
        - external:
            metric:
              name: rpm_per_pod
            target:
              type: Value
              value: '2000'
          type: External
        - resource:
            name: cpu
            target:
              averageUtilization: 80
              type: Utilization
          type: Resource
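
To show how these settings interact, here is a simplified Python model of a single scale-up decision. It uses the documented core formula, ceil(currentReplicas * currentMetric / target), takes the largest proposal across metrics, and applies the scale-up policies with selectPolicy Max. It ignores the HPA's tolerance band, stabilization windows, and the scale-down policies, and the metric readings are hypothetical.

```python
import math

MAX_REPLICAS = 10  # maxReplicas from the spec above


def desired_for_metric(current_replicas: int, observed: float, target: float) -> int:
    """Core HPA formula: ceil(currentReplicas * observed / target)."""
    return math.ceil(current_replicas * observed / target)


def scale_up_cap(current_replicas: int, pods: int = 4, percent: int = 25) -> int:
    """selectPolicy: Max lets the more permissive scale-up policy win."""
    by_pods = current_replicas + pods
    by_percent = math.ceil(current_replicas * (1 + percent / 100))
    return max(by_pods, by_percent)


def next_replicas(current_replicas: int, rpm_value: float, cpu_utilization: float) -> int:
    """Replica count after one evaluation (scale-down limits not modeled)."""
    proposals = [
        desired_for_metric(current_replicas, rpm_value, 2000),     # External metric target
        desired_for_metric(current_replicas, cpu_utilization, 80),  # CPU utilization target
    ]
    desired = max(proposals)  # with several metrics, the largest proposal is used
    return min(desired, scale_up_cap(current_replicas), MAX_REPLICAS)


# Hypothetical reading: 4 replicas, external metric at 3500 vs. 2000, CPU at 60%.
print(next_replicas(4, rpm_value=3500, cpu_utilization=60))
```

In the example the external metric asks for more replicas than CPU does, which is the point of a leading indicator: the cluster scales before CPU catches up. On the way down, selectPolicy Min picks the policy that permits the smallest change, so capacity is released slowly.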

Conclusion

Reducing AWS EKS compute costs can be challenging, but these five strategies can significantly lower your expenses without sacrificing performance or reliability. By moving to Graviton, leveraging Spot instances, choosing instance types that bin pack well, right-sizing resource requests and limits, and autoscaling on a leading indicator, you can achieve greater cost efficiency in your EKS environment. With these measures, you can better manage your AWS spend and allocate more resources to innovation and growth.

Want to find out more about how Arkose Labs is protecting businesses from today's advanced threats and cyberattacks? Book a demo!